Otniel-Bogdan Mercea

Since May 2025, I have been a Machine Learning Researcher at Apple, working on multimodal learning.

I earned my PhD in Computer Science with a specialization in AI in 2025 (thesis submitted in 2024) at the Max Planck Institute for Intelligent Systems and the University of Tübingen, supervised by Prof. Zeynep Akata and Prof. Andreas Geiger within the IMPRS-IS doctoral program. I was also a guest PhD student at Helmholtz Munich and the Technical University of Munich, supervised by Prof. Zeynep Akata. I earned my MSc in AI from the University of Edinburgh (2020), with a thesis supervised by Prof. Amos Storkey, and my BEng in Computers and Information Technology from Politehnica University of Timișoara (2019), with a thesis supervised by Prof. Calin-Adrian Popa.

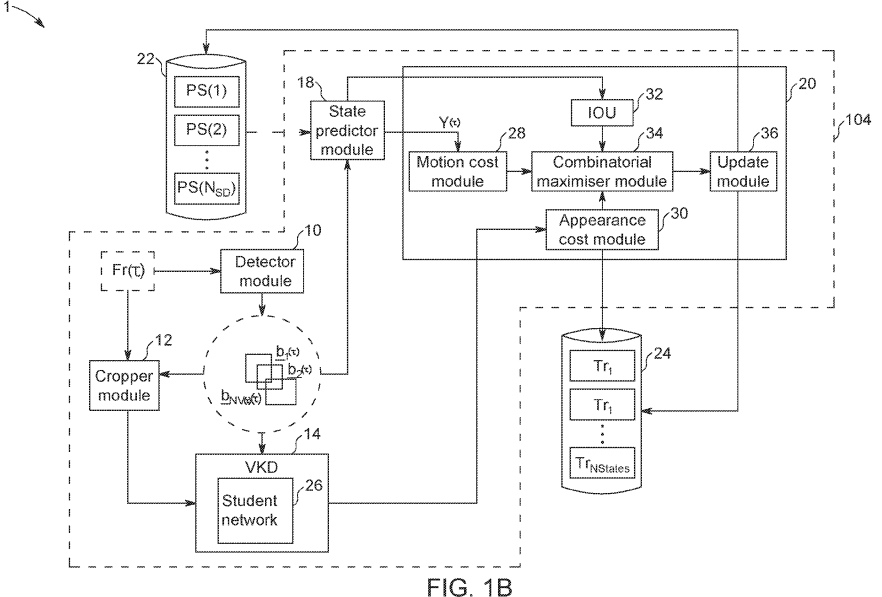

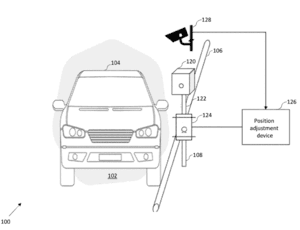

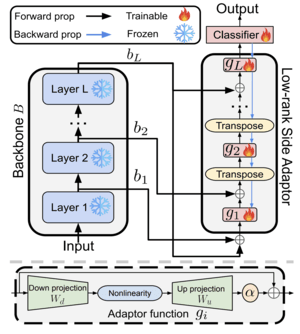

I have applied my research expertise in industry through several roles. At Google DeepMind (2024), I interned under the supervision of Stefano Pellegrini, Jasper Uijlings, and Cordelia Schmid, improving SAM 2 for scenarios with significant occlusion and movement. At Google Research (2023–2024), I collaborated with Anurag Arnab, Alexey Gritsenko, and Cordelia Schmid on efficient adaptation of large-scale models. I also collaborated with Aleksandra Nowak, Utku Evci, and Yann Dauphin from Google DeepMind on related projects. Previously, I was an ML Researcher at Everseen (2020–2021), developing real-time multi-camera tracking systems.

My research focuses on improving the efficiency of deep learning through data-efficient (low-shot) and model-efficient adaptation methods for large-scale models. I also have extensive experience in multimodal learning (audio-visual, multimodal language models) and video semantic segmentation and tracking.

News

| Date | News |
|---|---|
| May 07, 2025 | Thrilled to begin my journey at Apple as a Machine Learning Researcher. |
| Apr 16, 2025 | I am pleased to share that I have successfully defended my PhD dissertation. |
| Dec 27, 2024 | I finished my 4-month internship at Google DeepMind, where I worked on enhancing SAM 2 for scenarios involving significant occlusion or movement, under the supervision of Stefano Pellegrini, Jasper Uijlings and Cordelia Schmid. |
| Oct 21, 2024 | A new preprint on optimal adapter placement for efficient transfer learning is available. |
| Apr 04, 2024 | Our work on audio-visual generalized zero-shot learning (GZSL) using large multi-modal models was accepted at the CVPR 2024 workshops (L3D-IVU). |
| Mar 22, 2024 | I finished my 8-month internship at Google Research, where I worked on efficient adaptation under the supervision of Anurag Arnab, Alexey Gritsenko and Cordelia Schmid. |
| Feb 27, 2024 | LoSA was accepted as a HIGHLIGHT at CVPR 2024. |

Selected publications


Time-, Memory- and Parameter-Efficient Visual Adaptation. HIGHLIGHT @ CVPR, 2024, Seattle, USA


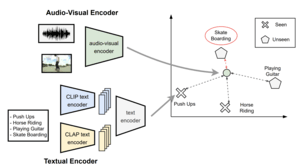

Audio-Visual Generalized Zero-Shot Learning using Pre-Trained Large Multi-Modal Models. CVPRW, 2024, Seattle, USA


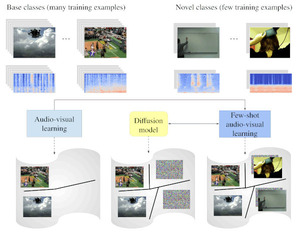

Text-to-feature diffusion for audio-visual few-shot learning. DAGM GCPR, 2023, Heidelberg, Germany


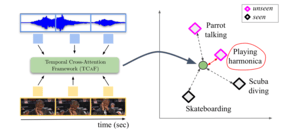

Temporal and cross-modal attention for audio-visual zero-shot learning. ECCV, 2022, Tel Aviv, Israel


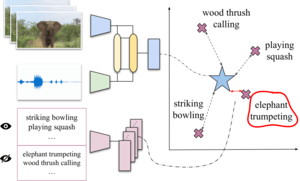

Audio-visual generalised zero-shot learning with cross-modal attention and language. CVPR, 2022, New Orleans, USA
